LangChain: Its Birth and Impact on the AI Industry

The rise of large language models (LLMs) like OpenAI’s GPT-4, Anthropic’s Claude, and open-source giants like LLaMA has unlocked a new wave of intelligent applications. But building these apps—from retrieval-augmented generation to autonomous agents—requires more than just calling an API.

That’s where LangChain comes in.

🚀 The Birth of LangChain

LangChain was introduced in late 2022 by Harrison Chase with a simple yet powerful goal:

Make it easier to build real-world applications on top of LLMs.

Initially written in Python, LangChain quickly grew into a flexible, extensible framework that helps developers chain together prompts, tools, memory, agents, and vector databases. What React did for frontend development, LangChain is doing for LLMs—creating composability and abstraction.

By early 2023, LangChain had gained widespread adoption, with contributions from the AI community and commercial support from LangChain Inc.

🧠 What LangChain Solves

Here’s why LangChain became essential:

-

Prompt Chaining

Easily link prompts together for multi-step reasoning workflows. -

Memory Management

Add short-term or long-term memory to make conversations feel more “aware.” -

Tool Integration

Let LLMs use tools like calculators, web search, or custom APIs via ReAct or function-calling. -

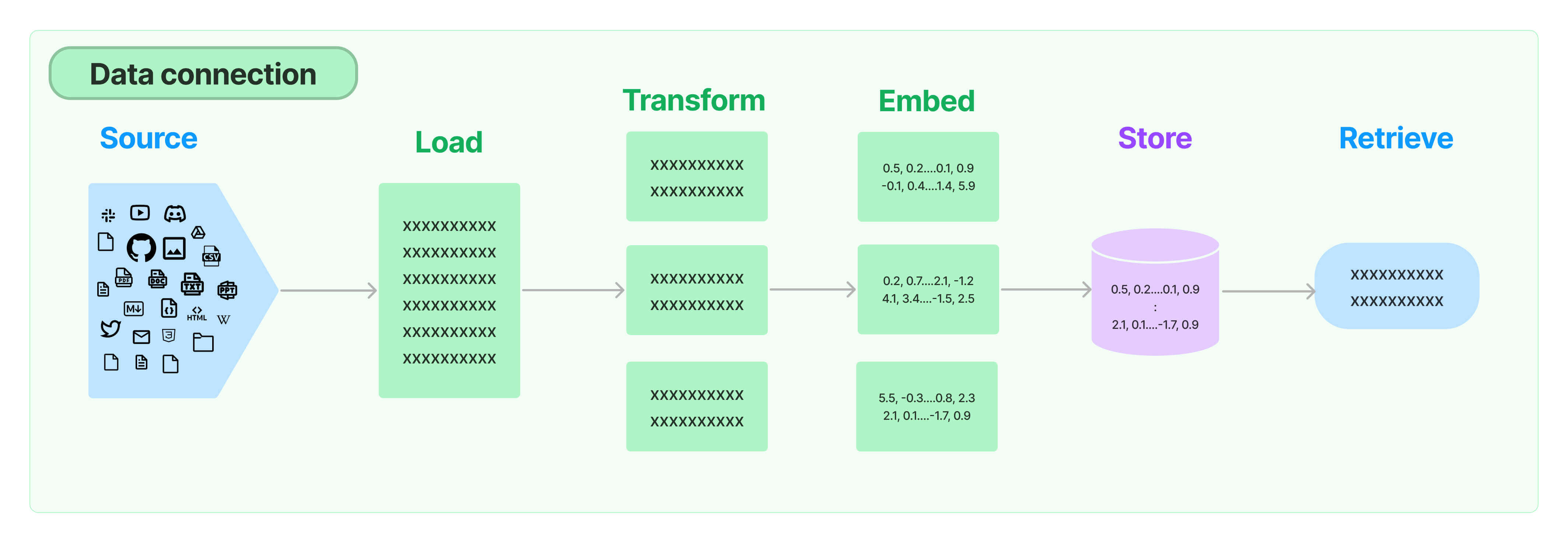

Vector Store Abstraction

Unified interface for Pinecone, Weaviate, Chroma, and others—crucial for Retrieval-Augmented Generation (RAG). -

Agent Architecture

Build autonomous agents that plan and act using LLMs, tools, and memory.
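To make these ideas concrete, here is a minimal plain-Python sketch of prompt chaining, windowed memory, and a tool registry. It deliberately does not import LangChain and is not LangChain's API. Every name in it (`fake_llm`, `prompt_step`, `chain`, `WindowMemory`, `TOOLS`, `use_tool`) is a hypothetical illustration, and `fake_llm` simply upper-cases its prompt in place of a real model call.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]
Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: upper-cases the prompt."""
    return prompt.upper()


def prompt_step(template: str, llm: LLM) -> Step:
    """Return a step that fills `template` with its input and calls `llm`."""
    def step(text: str) -> str:
        return llm(template.replace("{input}", text))
    return step


def chain(*steps: Step) -> Step:
    """Prompt chaining: each step's output becomes the next step's input."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


class WindowMemory:
    """Short-term memory: keeps only the most recent `max_turns` exchanges."""

    def __init__(self, max_turns: int = 2) -> None:
        self.max_turns = max_turns
        self.turns: List[str] = []

    def add(self, user: str, reply: str) -> None:
        self.turns.append(f"user: {user} | ai: {reply}")
        self.turns = self.turns[-self.max_turns:]


# Tool integration: a registry mapping tool names to ordinary functions.
TOOLS: Dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "reverse": lambda text: text[::-1],
}


def use_tool(name: str, argument: str) -> str:
    """Dispatch to a registered tool, or report that it is unknown."""
    if name not in TOOLS:
        return f"unknown tool: {name}"
    return TOOLS[name](argument)


# Two prompts linked into one pipeline.
pipeline = chain(
    prompt_step("summarize: {input}", fake_llm),
    prompt_step("translate: {input}", fake_llm),
)

# Record three exchanges; the window keeps only the last two.
memory = WindowMemory(max_turns=2)
for question in ["hi", "how are you", "bye"]:
    memory.add(question, pipeline(question))
```

An agent, in this simplified picture, is a loop that asks the model which tool to call next, runs it through something like `use_tool`, and feeds the result back in along with the remembered turns. A framework mainly adds the plumbing around that loop.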

🌍 Impact on the AI Industry

LangChain has become the de facto starting point for AI engineers building:

- AI Chatbots with persistent memory and custom tool usage

- LLM-Powered Search Engines using hybrid search + summarization

- AI Agents for workflow automation (e.g. LangGraph, BabyAGI)

- Custom SaaS Tools that wrap GPT/Claude with domain-specific logic

It has also influenced the architecture of other major open-source frameworks like LlamaIndex, Haystack, and Semantic Kernel.

Big companies like Zapier, Notion, and even Microsoft have explored or integrated LangChain patterns.

🛠️ Criticism and Evolution

With its rapid growth, LangChain faced criticism around:

- Complex abstractions that sometimes felt too opinionated

- Tight coupling between modules and LLM providers

- Debuggability in production environments

The maintainers responded with improvements:

- Modularization (langchain-core and langgraph)

- Support for streaming, retries, and tracing
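To give a sense of what retries and tracing mean in practice, here is a small plain-Python sketch under stated assumptions. It is not LangChain's implementation. `call_with_retries` and `make_flaky` are hypothetical helpers, the "trace" is just a list of strings, and the backoff delay defaults to zero so the example runs instantly.

```python
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.0,
    trace: Optional[List[str]] = None,
) -> T:
    """Call `fn` up to `attempts` times, recording each outcome in `trace`."""
    last_error: Optional[Exception] = None
    for attempt in range(1, attempts + 1):
        try:
            result = fn()
            if trace is not None:
                trace.append(f"attempt {attempt}: ok")
            return result
        except Exception as exc:
            last_error = exc
            if trace is not None:
                trace.append(f"attempt {attempt}: {type(exc).__name__}")
            if attempt < attempts:
                # Exponential backoff: base_delay, 2x, 4x, ...
                time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"all {attempts} attempts failed") from last_error


def make_flaky(failures: int) -> Callable[[], str]:
    """Hypothetical model call that times out `failures` times, then succeeds."""
    state = {"left": failures}

    def flaky() -> str:
        if state["left"] > 0:
            state["left"] -= 1
            raise TimeoutError("simulated timeout")
        return "ok"

    return flaky


trace: List[str] = []
result = call_with_retries(make_flaky(2), attempts=3, trace=trace)
```

Keeping a per-attempt record like `trace` is the simplest form of the debuggability that production users asked for: when a call finally fails, the history of what was tried is still available.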

LangChain is still evolving, but it's laying the groundwork for “AI-native” app development.

🧭 Final Thoughts

At Sigma Forge, we see LangChain as more than a library—it’s a shift in how developers think about software.

It transforms LLMs from black-box APIs into programmable, composable reasoning engines.

Whether you're building internal tooling, customer-facing chatbots, or autonomous agents—LangChain provides the infrastructure to make LLMs reliable, maintainable, and extensible.

The AI revolution isn't just about the models—it's about how we build with them.

— Team Sigma Forge